I am currently working on a project in which I want to build a German-to-Bavarian translator using machine learning. This falls under Natural Language Processing (NLP). TensorFlow, a library from Google, is often used for the implementation. It is available as TensorFlow.js as well as TensorFlow (Python). Since I develop professionally with Angular and am therefore familiar with TypeScript and JavaScript, I initially decided to build the NLP application with TensorFlow.js. I was naive enough to assume that the only difference between the two libraries would be the programming language. This is definitely not the case! For my NLP project, some basic functions (such as a tokenizer) are missing in TensorFlow.js. I have explained the general differences between TensorFlow.js and TensorFlow (Python) in a separate post.

I spent many evenings trying to get my project to work with TensorFlow.js and failed in the end. Switching to Python brought the breakthrough I was hoping for! I would recommend that everyone use Python for NLP applications. Nevertheless, in this article I want to explain the differences between TensorFlow.js and TensorFlow in relation to my project, using code examples. Along the way, I will also work my newly gained knowledge into the respective sections as best I can.

You might also be interested in: NLP application part 2 (OOV token, padding, creating the model and training the model).

Reading in data

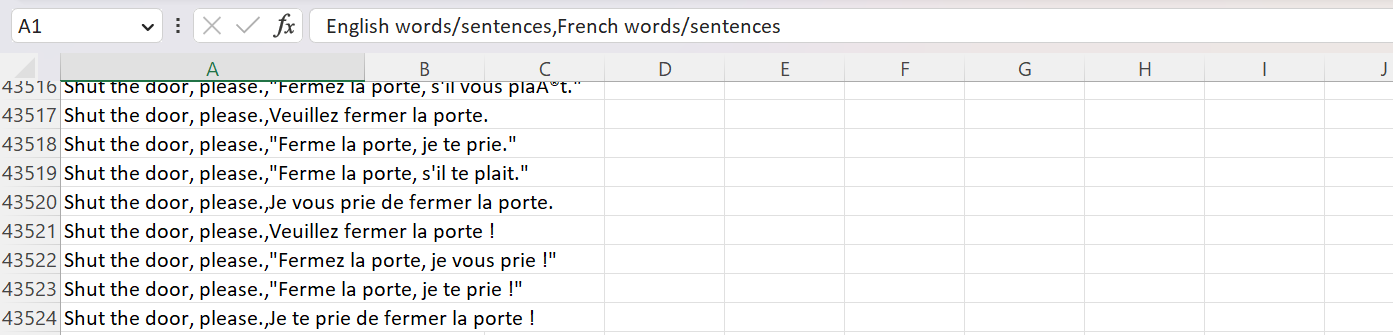

First of all, you need a data set with which the model will be trained later. I can recommend https://www.kaggle.com/ for this. There you will find a large number of freely usable data sets and even some code examples. You can either read in the data set via a link or download it and read it in locally from the file system. A good data set should contain over 100,000 examples, preferably including whole paragraphs. For example, this is what an English/French data set looks like as a CSV:

First, the simple variant using Python:

import pandas as pd

# read in dataSet for training

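# the DataFrame is called "data" because the later steps refer to it by that name

data = pd.read_csv("./dataset/eng_-french.csv")

data.columns = ["english", "french"]

print(data.head())

# info() prints its summary itself, so no print() is needed

data.info()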

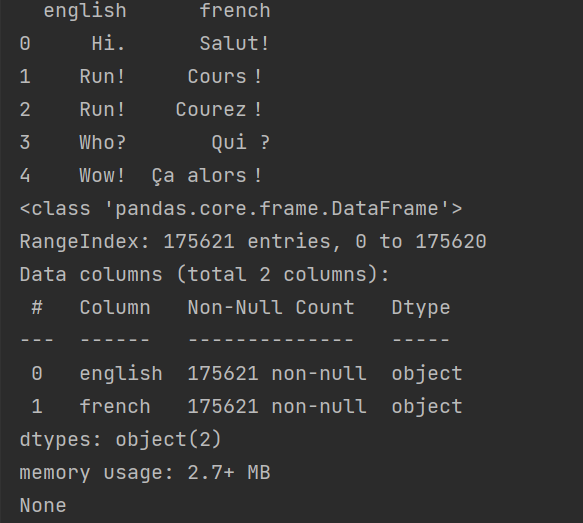

We use the pandas library to read in the CSV. With head() we can check whether it worked by displaying the first 5 rows. With info() we get more information, such as the number of columns and rows:

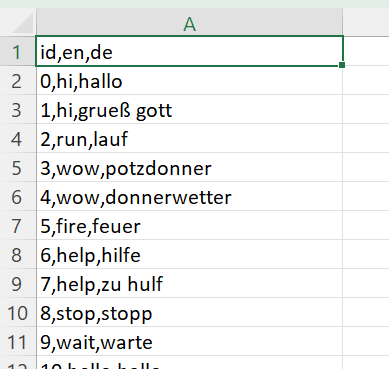

For comparison, TensorFlow.js (Tfjs) also offers a way to read in a CSV file:

const tf = require("@tensorflow/tfjs");

async function readInData() {

await tf.ready();

const languageDataSet = tf.data.csv("file://" + "./ger_en_trans.csv");

// Extract language pairs

const dataset = languageDataSet.map((record) => ({

en: record.en,

de: record.de,

}));

const pairs = await dataset.toArray();

console.log(pairs);

}

readInData();

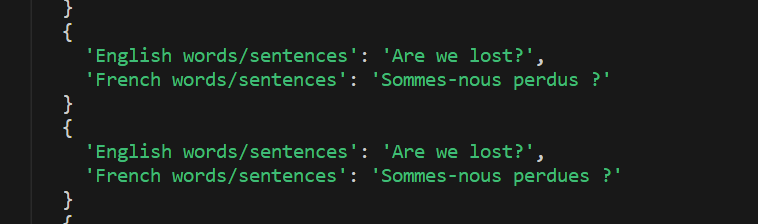

I tried at first to read in the same data set as in the Python version:

Afterwards I wanted to shorten the headings in the original CSV, but this strangely gave me an error message when reading in. Even when I restored the CSV to its original state, the error remained:

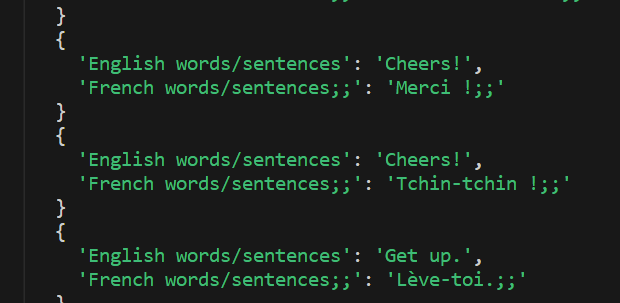

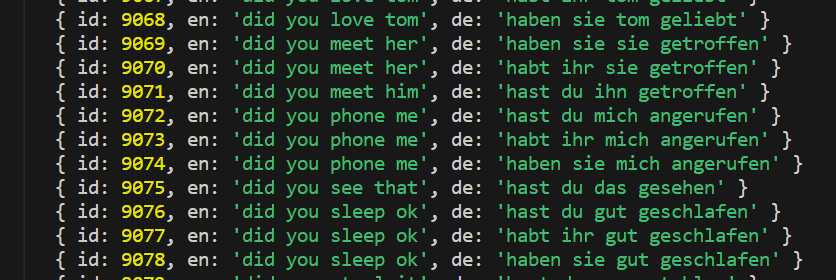

In the end, I decided to use a different data set:

This one was also much more readable when it was read in:

And here is the final result after the mapping:

Although Tfjs offers an extra function to read in the CSV, I still had more trouble than in the Python version. I have also not found a quick way to read in a data set in txt format. However, txt files are widespread!
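For comparison, on the Python side a txt file can be read with the standard library alone. The following is only a minimal sketch, assuming that each line of the file holds one sentence pair separated by a tab. The function name read_pairs and the sample sentences are made up for illustration.

```python
import csv
import io


def read_pairs(handle):
    """Read tab-separated sentence pairs from an open text file."""
    # QUOTE_NONE keeps quotation marks inside sentences untouched
    reader = csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    pairs = []
    for row in reader:
        # skip empty or malformed lines that lack a second column
        if len(row) < 2:
            continue
        pairs.append({"en": row[0], "de": row[1]})
    return pairs


# Hypothetical sample; a real file would be opened with open(path, encoding="utf-8")
sample = io.StringIO("Hello!\tHallo!\nI am hungry.\tIch habe Hunger.\n\n")
pairs = read_pairs(sample)
print(pairs)
```

The result has the same shape as the array of language pairs produced by the Tfjs mapping, so the later steps would not need to change.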

Prepare data

I have often seen that a cleaning function was written for data preparation and that the output set also received a start and end token. I then wondered whether the input set, i.e. the encoder, also needs a start and end token. In the context of sequence-to-sequence models, however, the encoder does not need explicit start and end tokens. Its purpose is to process the input sequence as it is and produce a representation of the input.

The decoder, on the other hand, which generates the output sequence, usually benefits from the use of start and end tokens. These tokens help to mark the beginning and end of the generated sequence. The use of start and end tokens is therefore specific to the decoder. During training, the input sequence of the decoder includes a start token at the beginning and excludes an end token at the end. The output sequence of the decoder contains the end token and excludes the start token. In this way, the model learns to generate the correct output sequence based on the input.
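The shift between decoder input and decoder output can be sketched in a few lines of plain Python. The function name make_decoder_pair and the example sentence are assumptions for illustration. In a real project the same slicing would be applied to the index sequences rather than to the words.

```python
def make_decoder_pair(target_tokens):
    """Split one tokenised target sentence into decoder input and decoder output."""
    decoder_input = target_tokens[:-1]   # keeps <start>, drops <end>
    decoder_output = target_tokens[1:]   # drops <start>, keeps <end>
    return decoder_input, decoder_output


tokens = ["<start>", "bonjour", "le", "monde", "<end>"]
decoder_input, decoder_output = make_decoder_pair(tokens)
print(decoder_input)   # ['<start>', 'bonjour', 'le', 'monde']
print(decoder_output)  # ['bonjour', 'le', 'monde', '<end>']
```

At every position the decoder sees the token at that position in the input and is trained to predict the token at the same position in the output, i.e. the next word.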

When creating translations with the trained model, you start with the start token and generate one token after another until you hit the end token or reach a maximum sequence length. Adding start and end tokens to the decoder set improves the performance of the NLP translator model. It helps to establish clear sequence boundaries and supports the generation process by indicating where the translation starts and ends.
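The generation loop described above might look roughly like this. It is a sketch under assumptions: predict_next stands for whatever call returns the next token from the trained model, and toy_predict_next is a hypothetical stand-in that simply replays a fixed answer so that the loop can be run without a model.

```python
def greedy_decode(predict_next, start_token="<start>", end_token="<end>", max_len=20):
    """Generate tokens one at a time until end_token or max_len is reached."""
    generated = [start_token]
    while len(generated) - 1 < max_len:
        next_token = predict_next(generated)
        if next_token == end_token:
            break
        generated.append(next_token)
    return generated[1:]  # drop the start token


# Hypothetical stand-in for a trained model: replays a fixed translation
def toy_predict_next(tokens_so_far):
    canned = ["servus", "welt", "<end>"]
    return canned[len(tokens_so_far) - 1]


print(greedy_decode(toy_predict_next))  # ['servus', 'welt']
```

The max_len limit matters in practice, because a poorly trained model may never produce the end token.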

In summary:

- Encoder: No need for start and end tokens. Processes the input sequence as it is.

- Decoder: Start and end tokens are helpful for generating the output sequence.

We start again with the easy part, namely Python. We want to clean up the data we read in. This means converting everything to lower case and removing all characters that are neither letters of the alphabet nor punctuation marks. For this we need Python's regular expression module (re).

import re

def clean(text):

    text = text.lower()  # lower case

    # remove any characters that are not a-z or one of ! ? ' ,

    # please note that French has additional characters; I have simplified that here

    text = re.sub(r"[^a-z!?',]", " ", text)

    return text

# apply cleaningFunctions to dataframe

data["english"] = data["english"].apply(lambda txt: clean(txt))

data["french"] = data["french"].apply(lambda txt: clean(txt))

# add <start> <end> token to decoder sentence (french)

data["french"] = data["french"].apply(lambda txt: f"<start> {txt} <end>")

print(data.sample(10))

The sample() function only serves to illustrate the data. Note that I have simplified here: since this is a French data set, one should actually write a separate cleaning function that also takes French letters like “ê” into account.
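A cleaning function that keeps French letters could look something like the following sketch. The function name clean_french and the exact set of accented letters are my assumptions, and the list may need to be extended for a real data set.

```python
import re
import unicodedata

# a-z plus common French accented letters and ligatures (assumed set)
FRENCH_LETTERS = "a-zàâäçéèêëîïôöùûüÿœæ"


def clean_french(text):
    # normalise to composed characters so that "e" + accent becomes one letter
    text = unicodedata.normalize("NFC", text.lower())
    # replace everything except the allowed letters and ! ? ' , with a space
    text = re.sub(f"[^{FRENCH_LETTERS}!?',]", " ", text)
    # collapse runs of whitespace left behind by the replacement
    return re.sub(r"\s+", " ", text).strip()


print(clean_french("Être ou ne pas être: c'est ça, Noël!"))
```

It would then be applied to the French column instead of clean(), before the start and end tokens are added.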

In Tfjs the process is absolutely identical. I have created a cleanData() function and modified the previous code:

function cleanData(text) {

//if necessary also remove any characters not a-z and ?!,'

return text.toLowerCase();

}

const dataset = languageDataSet.map((record) => ({

en: cleanData(record.en),

de: "startToken " + cleanData(record.de) + " endToken",

}));

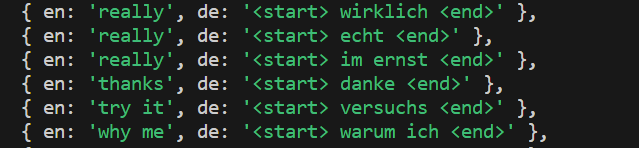

The result is therefore also identical to the Python approach:

If the words “start” and “end” also occur as regular words in the sentences, they must not end up with the same indices as the special tokens that mark the beginning and end of a sequence. When tokenising, it is therefore important to choose special tokens that are unlikely to occur in the actual input data. This ensures that the model can distinguish them from normal words and learns to produce the appropriate output sequences.

A common choice is “<start>” and “<end>”. Because such tokens are unlikely to be part of the regular vocabulary, the model can identify and process them correctly during training and generation.

For example, the tokenised sequences would look like this:

- Decoder Input: [“<start>”, “hello”, “world”]

- Decoder Output: [“hello”, “world”, “<end>”]

Therefore AVOID the following:

- Decoder Input: [“start”, “hello”, “world”]

- Decoder output: [“hello”, “world”, “end”]
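Whether a candidate token collides with the regular vocabulary can be checked before training. This is only a small sketch: the function name find_collisions and the example sentences are made up, and the check assumes that words are separated by whitespace.

```python
def find_collisions(sentences, special_tokens):
    """Return the special tokens that also occur as regular words."""
    vocabulary = {word for sentence in sentences for word in sentence.lower().split()}
    return sorted(token for token in special_tokens if token in vocabulary)


sentences = ["please start the engine", "this is the end"]
print(find_collisions(sentences, ["start", "end"]))      # ['end', 'start']
print(find_collisions(sentences, ["<start>", "<end>"]))  # []
```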

Tokenisation

# tokenization

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.preprocessing.text import Tokenizer

import numpy as np

# english tokenizer

english_tokenize = Tokenizer(filters='#$%&()*+,-./:;<=>@[\\]^_`{|}~\t\n')

english_tokenize.fit_on_texts(data["english"])

num_encoder_tokens = len(english_tokenize.word_index)+1

# print(num_encoder_tokens)

encoder = english_tokenize.texts_to_sequences(data["english"])

# print(encoder[:5])

max_encoder_sequence_len = np.max([len(enc) for enc in encoder])

# print(max_encoder_sequence_len)

# french tokenizer

# "<" and ">" are left out of the filters so that <start> and <end> survive tokenisation

french_tokenize = Tokenizer(filters="#$%&()*+,-./:;=@[\\]^_`{|}~\t\n")

french_tokenize.fit_on_texts(data["french"])

num_decoder_tokens = len(french_tokenize.word_index)+1

# print(num_decoder_tokens)

decoder = french_tokenize.texts_to_sequences(data["french"])

# print(decoder[:5])

max_decoder_sequence_len = np.max([len(dec) for dec in decoder])

# print(max_decoder_sequence_len)

This code performs tokenisation and sequence preprocessing with the Tokenizer class in TensorFlow.

- english_tokenize = Tokenizer(filters='#$%&()*+,-./:;<=>@[\\]^_`{|}~\t\n') initialises a tokenizer object for English sentences. The filters parameter lists the characters that are removed during tokenisation. Since the data has already been filtered in the cleaning step, filtering again here is not strictly necessary.

- english_tokenize.fit_on_texts(data["english"]) builds the internal vocabulary of the tokenizer from the English sentences in the variable data. Each word in the vocabulary is assigned a unique index.

- num_encoder_tokens = len(english_tokenize.word_index) + 1 determines the size of the English vocabulary. The word_index attribute of the tokenizer is a dictionary that maps words to their respective indices. The indices start at 1, so 1 is added to account for index 0, which is reserved for padding.

- encoder = english_tokenize.texts_to_sequences(data["english"]) converts the English sentences in the variable data into sequences of token indices. Each sentence is replaced by a list of integers representing the corresponding words.

- max_encoder_sequence_len = np.max([len(enc) for enc in encoder]) calculates the maximum length (number of tokens) among all encoded sequences. It applies NumPy's max function to the lengths collected by the list comprehension.

These steps help to prepare the sentences for further processing in an NLP model. This is necessary for both languages!
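To make these steps more tangible, here is a simplified stand-in in plain Python. It is not the Keras implementation, only a sketch of the same idea: words are counted, the most frequent word receives index 1, and index 0 stays free for padding. The function names build_word_index and to_sequences are made up for illustration.

```python
from collections import Counter


def build_word_index(sentences):
    """Assign an index to every word, most frequent first, starting at 1."""
    counts = Counter(word for sentence in sentences for word in sentence.split())
    ordered = sorted(counts.items(), key=lambda item: -item[1])
    return {word: index for index, (word, _) in enumerate(ordered, start=1)}


def to_sequences(sentences, word_index):
    """Replace each known word by its index; unknown words are dropped."""
    return [[word_index[w] for w in s.split() if w in word_index] for s in sentences]


sentences = ["i am hungry", "i am very tired", "i sleep"]
word_index = build_word_index(sentences)
sequences = to_sequences(sentences, word_index)

vocab_size = len(word_index) + 1               # +1 for the padding index 0
max_len = max(len(seq) for seq in sequences)   # longest sentence in tokens

print(word_index)
print(sequences)   # [[1, 2, 3], [1, 2, 4, 5], [1, 6]]
print(vocab_size)  # 7
print(max_len)     # 4
```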

The sentences have now been tokenised and converted into sequences of token indices, and the maximum sequence length has been determined. An example sentence from the dataset now looks like this: [148, 252, 59, 14, 111]. Here, 148 could stand for “I”, 252 for “am”, 59 for “very”, 14 for “hungry” and 111 for “now”.

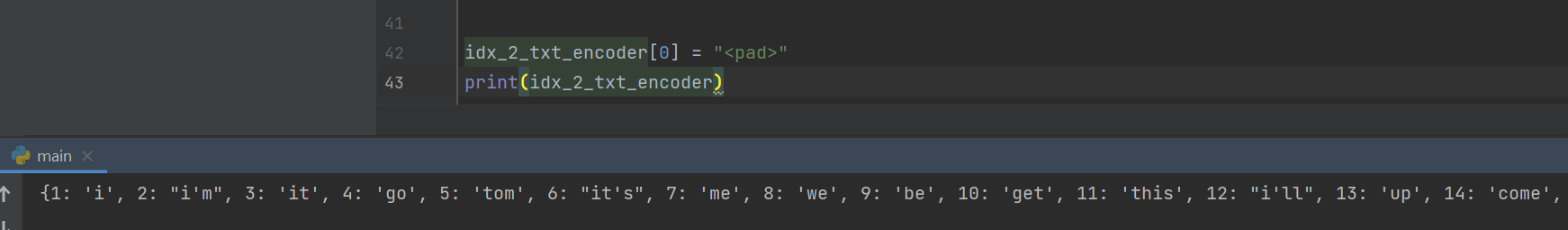

idx_2_txt_decoder = {k: i for i, k in french_tokenize.word_index.items()}

# print(idx_2_txt_decoder)

idx_2_txt_encoder = {k: i for i, k in english_tokenize.word_index.items()}

# print(idx_2_txt_encoder)

idx_2_txt_decoder[0] = "<pad>"

idx_2_txt_encoder[0] = "<pad>"

The line idx_2_txt_encoder = {k: i for i, k in english_tokenize.word_index.items()} creates a dictionary idx_2_txt_encoder that maps token indices to the corresponding words in the English vocabulary. The expression in braces is a dictionary comprehension that iterates over the key-value pairs in english_tokenize.word_index. In each iteration, the first variable (i) holds the key, i.e. a word from the vocabulary, and the second variable (k) holds the value, i.e. the index of that word. The comprehension then builds a new dictionary whose keys are the indices (k) and whose values are the words (i).

The resulting idx_2_txt_encoder dictionary lets you look up the word that belongs to a particular index in the English vocabulary. english_tokenize.word_index itself is the exact reverse: there the key is the word and the value is the index. The line idx_2_txt_encoder[0] = "<pad>" adds a special entry to the dictionary: the word "<pad>" is assigned to index 0, which the tokenizer never assigns to a word, so that it can serve as the padding token when sequences are padded.

Afterwards, the dictionaries should be saved. Once the model has been trained and is in use, its translations will also be a series of indices, and the dictionaries are needed to turn them back into readable sentences.

# Saving the dictionaries

import pickle

with open("./saves/idx_2_word_input.txt", "wb") as f:

    pickle.dump(idx_2_txt_encoder, f)

with open("./saves/idx_2_word_target.txt", "wb") as f:

    pickle.dump(idx_2_txt_decoder, f)
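How a saved dictionary is used later can be sketched as follows. The small example dictionary, the decode function and the "<unk>" fallback for unknown indices are assumptions for illustration. A temporary folder is used here so that the sketch runs without the ./saves directory.

```python
import os
import pickle
import tempfile

# Hypothetical miniature version of idx_2_txt_decoder
idx_2_txt = {0: "<pad>", 1: "<start>", 2: "<end>", 3: "bonjour", 4: "monde"}


def decode(indices, idx_2_txt):
    """Turn a sequence of indices back into a readable sentence."""
    skip = {"<pad>", "<start>", "<end>"}
    words = [idx_2_txt.get(i, "<unk>") for i in indices]
    return " ".join(w for w in words if w not in skip)


with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "idx_2_word_target.txt")
    with open(path, "wb") as f:
        pickle.dump(idx_2_txt, f)
    # later, e.g. in the application that uses the trained model
    with open(path, "rb") as f:
        restored = pickle.load(f)

sentence = decode([1, 3, 4, 2, 0, 0], restored)
print(sentence)  # bonjour monde
```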

The same process can also be built for the NLP application in TensorFlow.js. Of course, you need a few more lines of code, and the overall effort is higher. The first hurdle is the tokenizer. Unfortunately, unlike TensorFlow (Python), Tfjs does not have its own tokenizer. After extensive research, I luckily found natural.WordTokenizer from the natural package. I would like to point out that a Node.js project is definitely required: Tfjs can be integrated via a <script> tag, but natural.WordTokenizer cannot!

Another important point is that the WordTokenizer removes “<” and “>”. An output sentence “<start> I eat <end>” therefore simply becomes [‘start’, ‘I’, ‘eat’, ‘end’]. Thus the “<start>” and “<end>” tokens are no longer clearly recognisable! I have therefore replaced them in the JS code from the beginning with “startToken” and “endToken”.

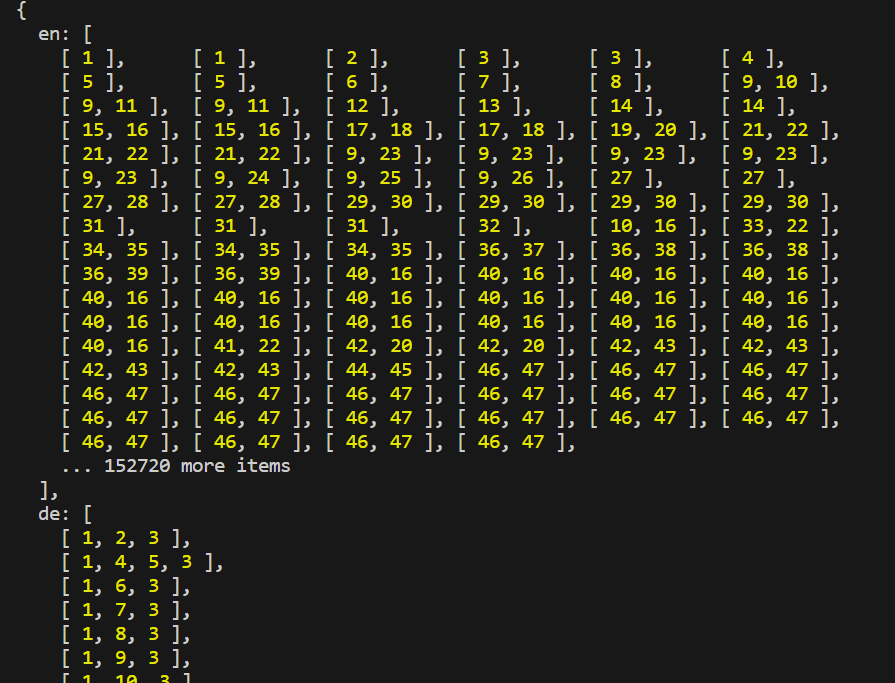

First, we tokenise every single sentence from the dataset again and then create a vocabulary dictionary for each of the two languages. Finally, we replace all the words with the indexes from the vocabulary dictionary:

const natural = require("natural");

function tokenize(data) {

const tokenizer = new natural.WordTokenizer();

// declared with const so that they do not leak into the global scope

const enData = data.map((row) => tokenizer.tokenize(row.en));

const deData = data.map((row) => tokenizer.tokenize(row.de));

const enVocabulary = new Map();

const deVocabulary = new Map();

// Insert <pad> at index 0

enVocabulary.set("<pad>", 0);

deVocabulary.set("<pad>", 0);

const fillVocabulary = (langData, vocabMap) => {

langData.forEach((sentence) => {

sentence.forEach((word) => {

if (!vocabMap.has(word)) {

const newIndex = vocabMap.size;

vocabMap.set(word, newIndex);

}

});

});

};

fillVocabulary(enData, enVocabulary);

fillVocabulary(deData, deVocabulary);

// Replace words with indexes

const indexedEnData = enData.map((element) =>

element.map((word) => enVocabulary.get(word))

);

const indexedDeData = deData.map((element) =>

element.map((word) => deVocabulary.get(word))

);

return { en: indexedEnData, de: indexedDeData };

}

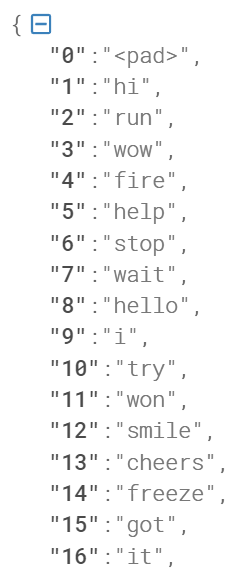

To be able to convert the results of our model back into words later, and to be able to use the model in a real application, we save the two vocabulary dictionaries. I have swapped the keys and values, but this is not mandatory:

const fs = require("fs");

// make sure the target folder exists, then store the input and output key-value pairs

fs.mkdirSync("vocabulary", { recursive: true });

fs.writeFileSync(

"vocabulary/inputVocableSet.json",

JSON.stringify(switchKeysAndValues(Object.fromEntries(enVocabulary)))

);

fs.writeFileSync(

"vocabulary/outputVocableSet.json",

JSON.stringify(switchKeysAndValues(Object.fromEntries(deVocabulary)))

);

function switchKeysAndValues(obj) {

const switchedObj = {};

for (const key in obj) {

if (Object.prototype.hasOwnProperty.call(obj, key)) {

const value = obj[key];

switchedObj[value] = key;

}

}

return switchedObj;

}

As a result we get a JSON object with our vocabulary:

We then return the result of our function: